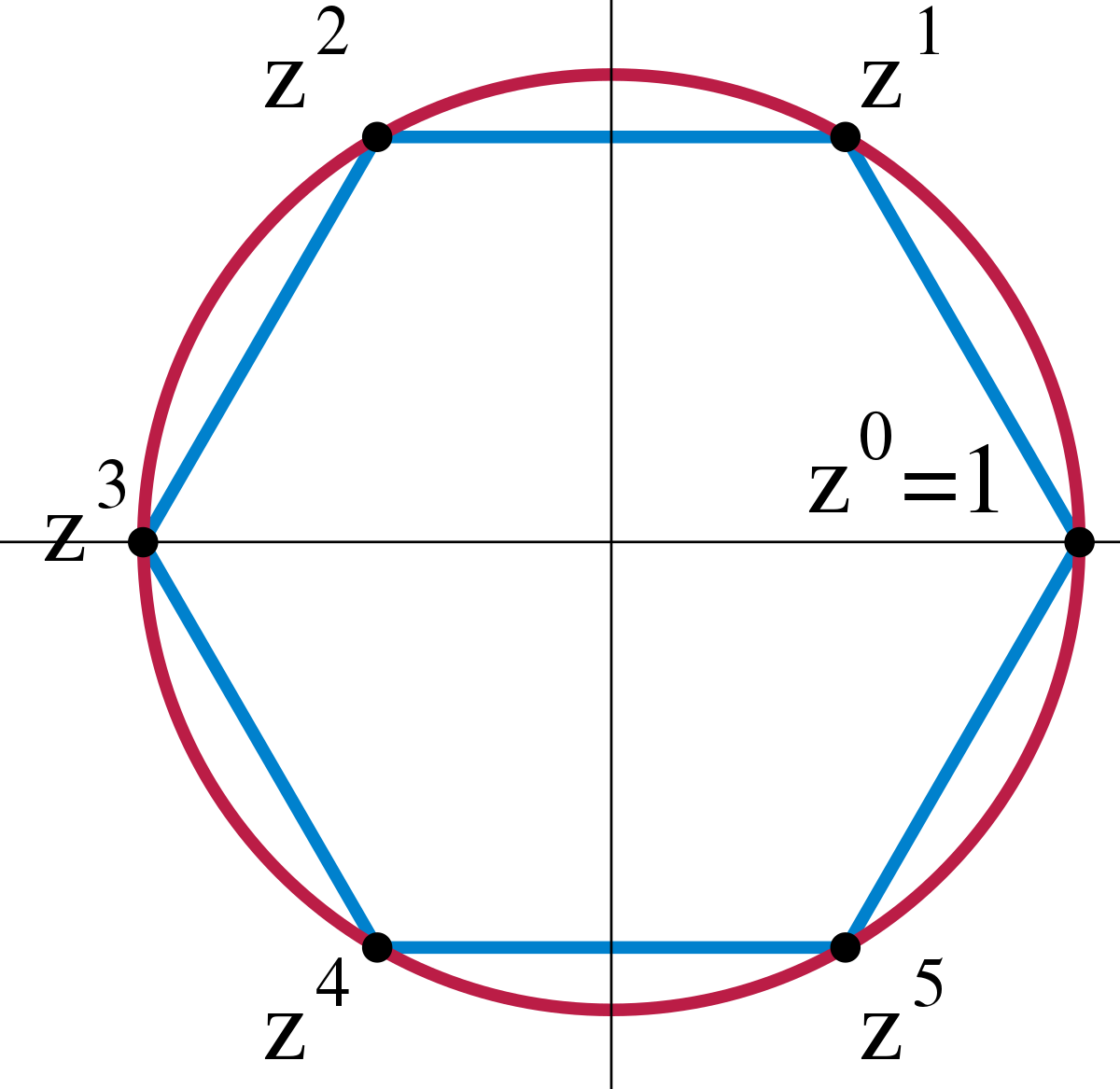

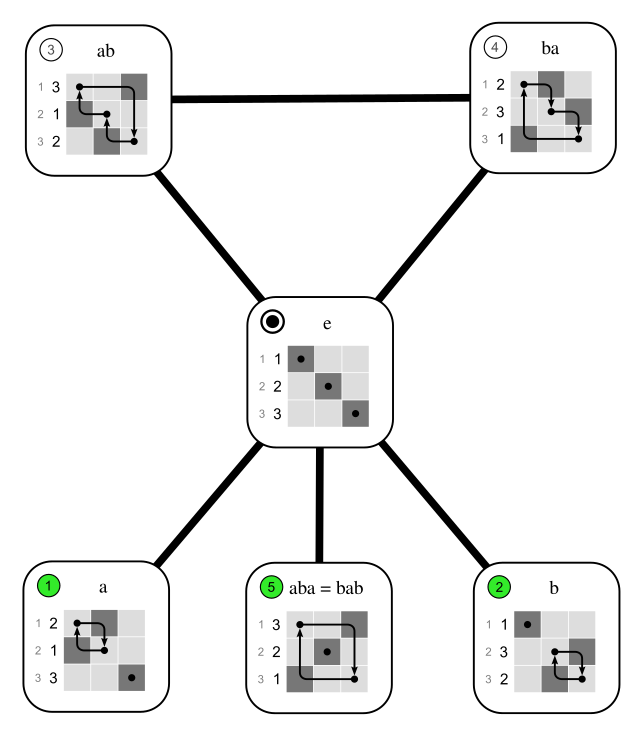

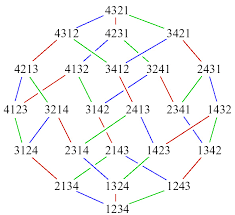

This page is about the symmetric group. For the cyclic group or the dihedral group click on the associated picture below.

Everything is based on the fabulous work of Joel Gibson related to Joel's Lievis. See also some slides.

For a nice illustration of why linear maps do not go very well with neural networks, and much more, see here.

ReLU from the standard representation to the trivial representation

Take the \(n\)-dimensional permutation representation \(P_{n}\) of the symmetric group \(S_{n}\). Over the real numbers (and actually over any field of characteristic zero) we can decompose it into the trivial and standard \(S_{n}\)-representations:

\[

P_{n}\cong L_{(n)}\oplus L_{(n-1,1)}.

\]
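As a concrete check of this decomposition, here is a minimal Python sketch. It assumes the usual model in which \(P_{n}\) is \(\mathbb{R}^{n}\) with \(S_{n}\) permuting coordinates, \(L_{(n)}\) is the line of constant vectors and \(L_{(n-1,1)}\) is the space of vectors whose coordinates sum to zero. The helper names `split`, `permute` and `is_equivariant` are made up for this illustration.

```python
from itertools import permutations

def split(v):
    """Split v into its constant part and its sum-zero part."""
    n = len(v)
    mean = sum(v) / n
    trivial = [mean] * n               # component in L_(n)
    standard = [x - mean for x in v]   # component in L_(n-1,1)
    return trivial, standard

def permute(v, sigma):
    """Act on v by sigma, moving coordinate i to position sigma[i]."""
    w = [0.0] * len(v)
    for i, x in enumerate(v):
        w[sigma[i]] = x
    return w

def is_equivariant(v, tol=1e-12):
    """Check that splitting commutes with every permutation of the coordinates."""
    trivial, standard = split(v)
    for sigma in permutations(range(len(v))):
        t, s = split(permute(v, sigma))
        expected = permute(trivial, sigma) + permute(standard, sigma)
        if any(abs(a - b) > tol for a, b in zip(t + s, expected)):
            return False
    return True

trivial, standard = split([3.0, -1.0, 4.0, 2.0])
print(trivial)    # [2.0, 2.0, 2.0, 2.0]
print(standard)   # [1.0, -3.0, 2.0, 0.0]
print(is_equivariant([3.0, -1.0, 4.0, 2.0]))  # True
```

The two parts add back up to the original vector, and each part stays in its own summand under every permutation, which is what the direct sum decomposition says.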

The standard representation \(L_{(n-1,1)}\) of the symmetric group \(S_{n}\) is \((n-1)\)-dimensional, so we can think of the ReLU map obtained from

\[

L_{(n-1,1)}\xrightarrow{\text{include}}P_{n}\xrightarrow{\hspace{0.5cm}\text{ReLU}\hspace{0.5cm}}P_{n}\xrightarrow{\text{project}}L_{(n)}

\]

as a map to \(\mathbb{R}\) with \((n-1)\) parameters. Fixing all but two of the parameters to real numbers gives the maps illustrated below for \(n=4\) (first) and \(n=7\) (second).

The \(n=4\) map is animated, varying the remaining parameter, while you can vary the parameters for \(n=7\) yourself.
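To make the include, ReLU, project composition concrete, here is a minimal Python sketch. It rests on several assumptions that are not taken from the page: the standard representation is realized as the sum-zero vectors in \(\mathbb{R}^{n}\), the \((n-1)\) parameters are coordinates with respect to the hypothetical basis \(e_{i}-e_{n}\), ReLU is applied coordinatewise, and the trivial representation is identified with \(\mathbb{R}\) via the coordinate mean. A different basis or normalization would rescale or reparametrize the pictures.

```python
def relu(x):
    """Rectified linear unit on a single real number."""
    return max(x, 0.0)

def include(params):
    """Embed (n-1) parameters as a sum-zero vector in R^n (assumed basis e_i - e_n)."""
    return list(params) + [-sum(params)]

def project_to_trivial(v):
    """Project onto the constant vectors, read off as one real number (the mean)."""
    return sum(v) / len(v)

def relu_map(params):
    """Composite: include, coordinatewise ReLU, project to the trivial representation."""
    return project_to_trivial([relu(x) for x in include(params)])

def slice_n4(fixed, grid):
    """For n = 4, fix the third parameter and sample the other two on a grid."""
    return [[relu_map((a, b, fixed)) for b in grid] for a in grid]

print(relu_map((1.0, -2.0, 0.5)))    # 0.5
print(relu_map((-1.0, 2.0, -0.5)))   # 0.5
```

The two printed values are the images of a vector and its negative. A linear map would send them to opposite numbers, so the composite is genuinely nonlinear even though the inclusion and the projection are both linear. Calling `slice_n4` with different values of `fixed` gives the kind of two-parameter slices that the \(n=4\) animation runs through.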